Decentralised, Open-Source Pathways to Trusting Our (My) AI Agents

digital constellation: First Person Network, BGIN, DIF, Kwaai

Meet the First Person Network

The First Person Network emerged from a collaboration between Linux Foundation Decentralised Trust, Trust Over IP Foundation, Decentralized Identity Foundation, and OpenWallet Foundation to solve a foundational internet problem: how do we prove someone is a real, unique person with authentic relationships in a world where AI can perfectly impersonate humans?

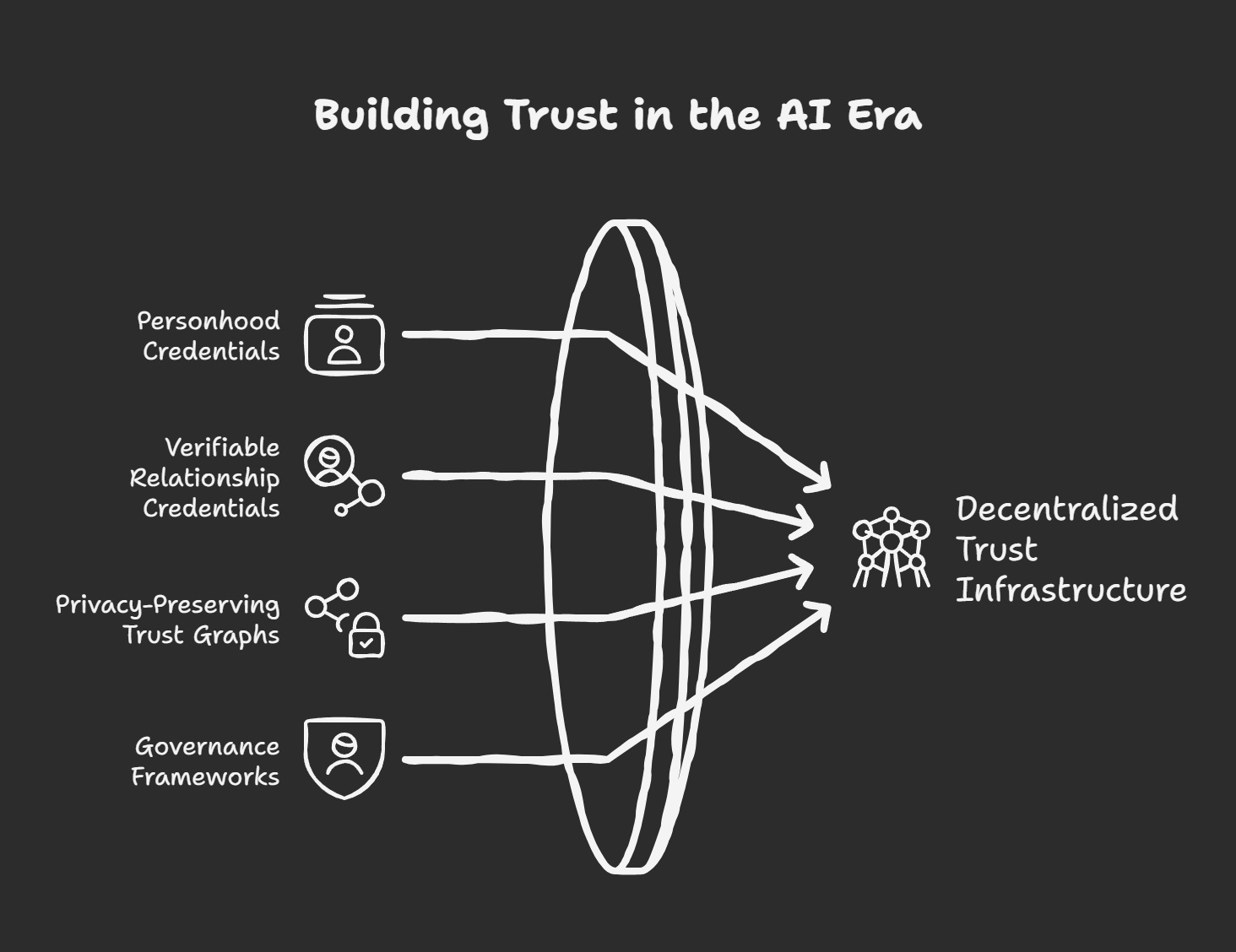

It is decentralised trust infrastructure built on two credential types, Personhood Credentials and Verifiable Relationship Credentials, with key ceremonies to connect them.

Together they form privacy-preserving trust graphs without centralised databases or global biometric surveillance. The network provides not just proof of personhood, but the governance frameworks needed for individuals to safely delegate authority to personal AI agents.

This isn’t a single-vendor solution, a facilitation, an event, or a theoretical framework.

It’s a multi-stakeholder, open-source approach to building the trust layer the internet has always needed, now becoming urgent as autonomous AI agents act on our behalf across digital systems.

When AI can perfectly impersonate humans and autonomous agents are acting on our behalf across the digital landscape, one question becomes urgent: how do we prove someone is real, unique, and authorised to act?

I recently contributed to the First Person Network whitepaper, an 80-page technical document that addresses one of the internet’s foundational challenges: privacy-preserving proof of personhood. This work extends beyond human verification to establish the trust infrastructure necessary for a world where AI agents operate on behalf of individuals rather than merely alongside them.

The Problem: Trust is Eroding Fast

The XZ backdoor incident nearly resulted in one of the most severe malware injections in open source infrastructure history. A sophisticated threat actor invested two years constructing a fabricated contributor identity to obtain maintainer privileges on critical Linux utilities. Generative AI systems now produce synthetic media that exceeds human detection thresholds. As we increasingly delegate decision-making to autonomous agents, a fundamental question emerges: who do these agents actually represent?

The Solution: Decentralised Trust Graphs

The First Person Project introduces two cryptographic primitives:

Personhood Credentials attest to an individual’s unique existence within a specific ecosystem. They are issued by trusted institutions, including universities, employers, communities, or government entities.

Verifiable Relationship Credentials are exchanged peer-to-peer between credential holders, establishing an attestable network of authentic trust relationships.

These credentials combine to form a decentralised trust graph, eliminating centralised databases and global biometric surveillance while maintaining privacy-preserving verification of identity and authentic relationships.
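To make the two credential types concrete, here is a minimal sketch of how they might compose into a trust graph. Everything in it is an assumption for illustration: the class names, the plain-string holder identifiers, and the in-memory `Ecosystem` object are hypothetical, and no cryptography is shown. In the design described above, credentials would live with their holders rather than in one shared object.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass(frozen=True)
class PersonhoodCredential:
    holder: str      # hypothetical holder identifier
    issuer: str      # e.g. a university, employer, or community
    ecosystem: str   # uniqueness is scoped to this ecosystem only


@dataclass(frozen=True)
class RelationshipCredential:
    issued_by: str   # holder who issued this half of the exchange
    issued_to: str   # holder who received it


class Ecosystem:
    """In-memory stand-in for one issuing ecosystem (illustration only)."""

    def __init__(self, name: str, issuer: str) -> None:
        self.name = name
        self.issuer = issuer
        self._credentials: dict[str, PersonhoodCredential] = {}
        self.relationships: set[RelationshipCredential] = set()

    def issue_personhood(self, holder: str) -> PersonhoodCredential:
        # One personhood credential per person per ecosystem.
        if holder in self._credentials:
            raise ValueError(f"{holder} already holds a credential in {self.name}")
        cred = PersonhoodCredential(holder, self.issuer, self.name)
        self._credentials[holder] = cred
        return cred

    def exchange_relationship(self, a: str, b: str):
        # Relationship credentials are exchanged only between credential holders.
        for holder in (a, b):
            if holder not in self._credentials:
                raise PermissionError(f"{holder} holds no personhood credential")
        pair = (RelationshipCredential(a, b), RelationshipCredential(b, a))
        self.relationships.update(pair)
        return pair

    def trust_graph(self) -> dict[str, set[str]]:
        # Adjacency view of the relationships exchanged so far.
        graph: dict[str, set[str]] = {}
        for vrc in self.relationships:
            graph.setdefault(vrc.issued_by, set()).add(vrc.issued_to)
        return graph


eco = Ecosystem("example-university", "did:example:university")
eco.issue_personhood("alice")
eco.issue_personhood("bob")
eco.exchange_relationship("alice", "bob")
```

The point of the sketch is the dependency order: a relationship edge can only exist between two parties who each already hold a personhood credential.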

Why This Matters for Private AI

This research connects directly to my ongoing work across the decentralized identity, private AI and blockchain governance domains, including Kwaai’s Personal AI Operating System architecture centered on self-sovereign identity principles, and Agent Kyra’s exploration of sovereign AI agent networks.

The whitepaper dedicates substantial analysis to First Person Certified AI agents, autonomous systems that demonstrably operate on behalf of verified individuals with cryptographically authenticated authority.

The proposed infrastructure includes:

Decentralised identifiers (DIDs) for both human principals and their delegated agents

Personal private communication channels enabling secure agent interaction

Governance frameworks defining explicit agent authority boundaries

Zero-knowledge proof systems preserving privacy while establishing trust
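A rough sketch of what a delegation with an explicit authority boundary could look like follows. It is not taken from the whitepaper: the `Delegation` type, the action names, and the `did:example` identifiers are hypothetical, and an HMAC over a shared secret stands in for the asymmetric signature a real system would use.

```python
import hashlib
import hmac
import json
from dataclasses import dataclass, replace


@dataclass(frozen=True)
class Delegation:
    principal_did: str      # the human granting authority
    agent_did: str          # the agent receiving authority
    allowed_actions: tuple  # the explicit authority boundary
    proof: str              # hex MAC over the three fields above


def _payload(principal_did, agent_did, allowed_actions) -> bytes:
    # Canonical byte encoding of the fields covered by the proof.
    return json.dumps(
        {"principal": principal_did, "agent": agent_did,
         "actions": sorted(allowed_actions)},
        sort_keys=True,
    ).encode()


def issue_delegation(key: bytes, principal_did, agent_did, actions) -> Delegation:
    # ASSUMPTION: HMAC stands in for a real digital signature by the principal.
    actions = tuple(sorted(actions))
    mac = hmac.new(key, _payload(principal_did, agent_did, actions),
                   hashlib.sha256).hexdigest()
    return Delegation(principal_did, agent_did, actions, mac)


def is_authorised(d: Delegation, key: bytes, agent_did: str, action: str) -> bool:
    expected = hmac.new(
        key, _payload(d.principal_did, d.agent_did, d.allowed_actions),
        hashlib.sha256,
    ).hexdigest()
    return (
        hmac.compare_digest(expected, d.proof)   # proof matches the fields
        and d.agent_did == agent_did             # presented by the right agent
        and action in d.allowed_actions          # inside the authority boundary
    )


key = b"hypothetical-principal-secret"
grant = issue_delegation(key, "did:example:alice", "did:example:alice-agent",
                         ["read:calendar"])
# Widening the boundary after issuance invalidates the proof.
tampered = replace(grant, allowed_actions=("read:calendar", "send:payment"))
```

Here `is_authorised(grant, key, "did:example:alice-agent", "read:calendar")` succeeds, while any action outside the boundary, a different agent DID, or the `tampered` copy is rejected.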

In his 2025 keynote, Linux Foundation Executive Director Jim Zemlin described how First Person credentials could address open source supply chain security challenges, using the XZ backdoor incident as a case study. This framework represents the necessary infrastructure for environments where human-AI collaboration requires verifiable delegation.

The keynote is worth a listen.

BGIN’ing the Multi-Agent Framework

While these architectural patterns provide the theoretical foundation, I’m currently translating these concepts into executable systems through the BGIN AI Multi-Agent Framework.

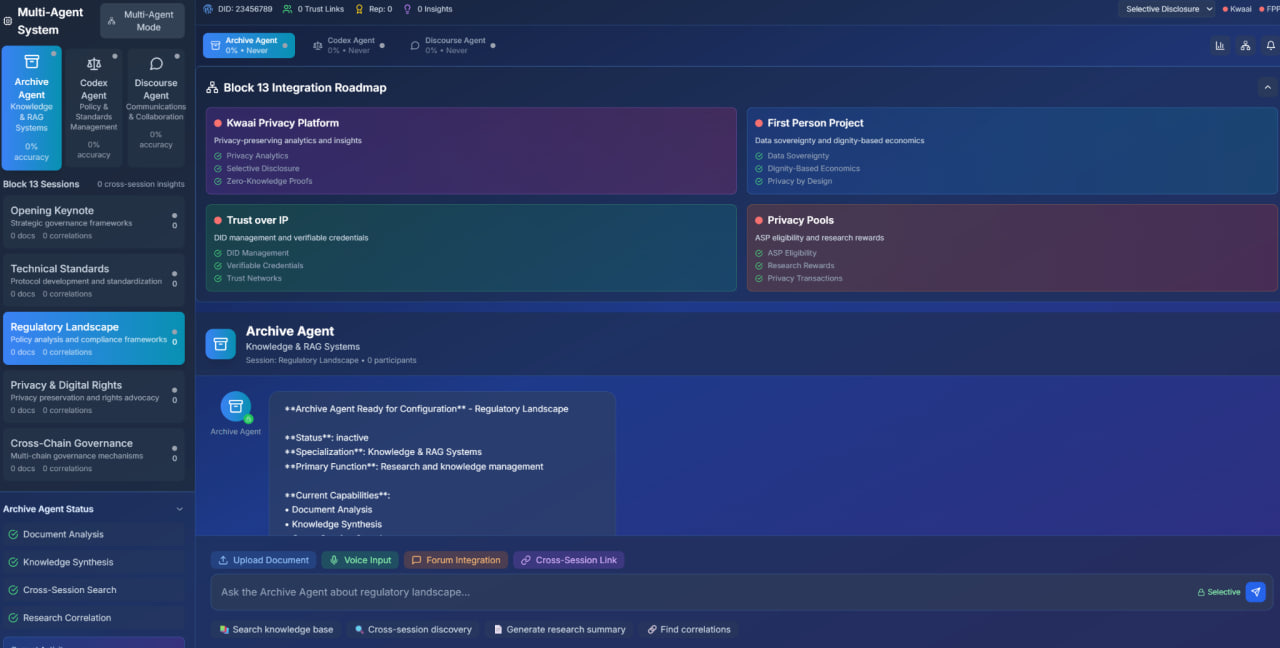

A privacy-preserving research platform is being developed for and throughout BGIN Block 13 in Washington D.C. this October.

This implementation aims to embody the distributed consciousness architecture central to trustworthy agent systems. Rather than a single monolithic AI, the framework will coordinate three specialised agents, each with defined authority boundaries and cryptographically verifiable capabilities:

🤖 BGIN AI Three-Agent System

Archive Agent - Knowledge & RAG Systems

- Document analysis and knowledge synthesis

- Cross-session search and retrieval

- Privacy-preserving knowledge management

- Distributed consciousness architecture

- Kwaai Integration: Privacy-preserving analytics and insights

- FPP Compliance: Data sovereignty and user-controlled research data

- ToIP Framework: DID-based identity and verifiable credentials

- Privacy Pools: ASP eligibility for research contributions

Codex Agent - Policy & Standards Management

- Policy analysis and standards development

- Compliance checking and verification

- Real-time sovereignty enforcement

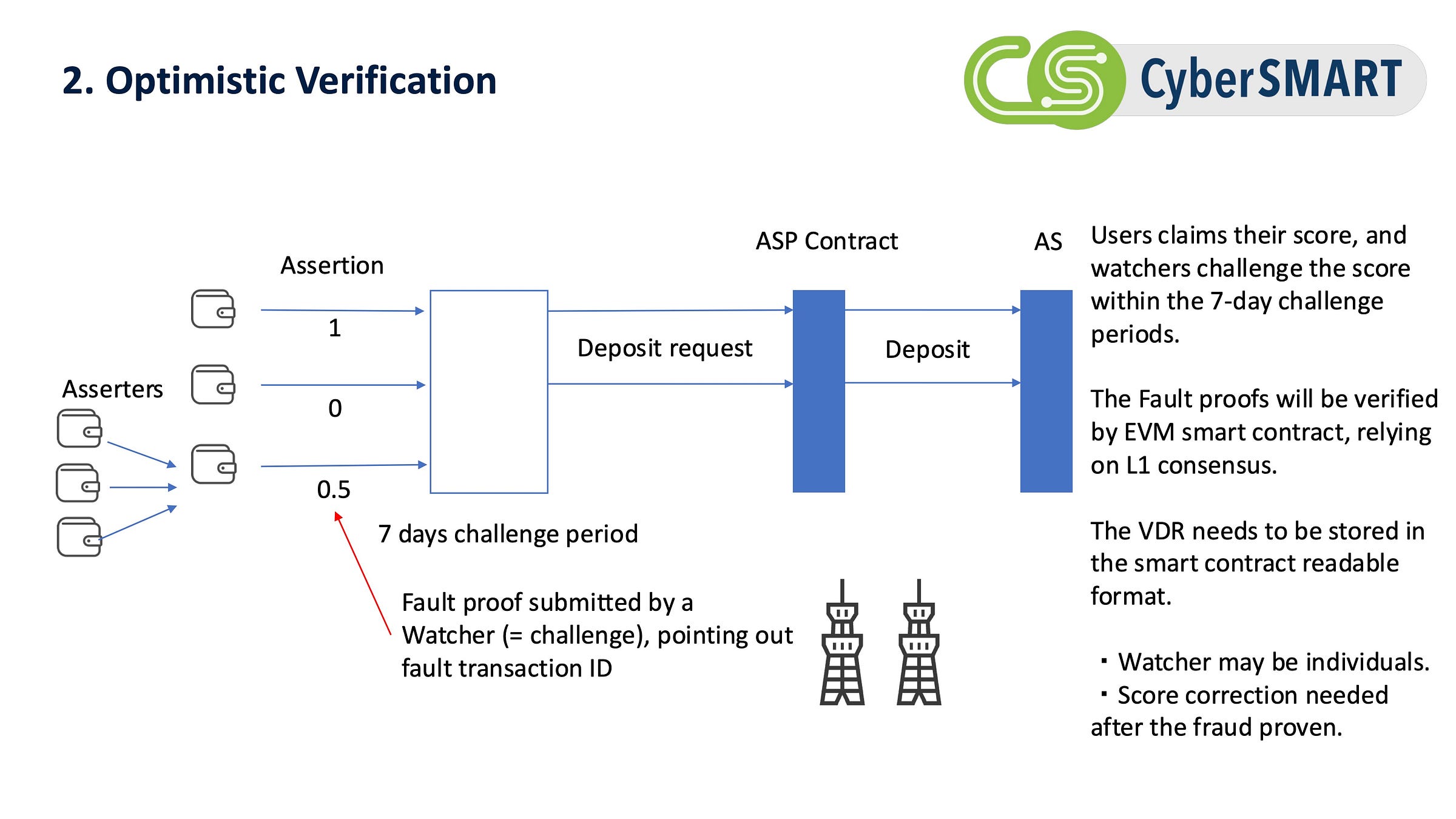

- Cryptoeconomic verification systems

- FPP Integration: Dignity-based governance and policy analysis

- ToIP Framework: Policy analysis credentials and trust protocols

- Privacy Pools: Trust-based policy compliance verification

Discourse Agent - Communications & Collaboration

- BGIN Discourse community integration

- Forum integration and community management

- Consensus building and collaboration tools

- Privacy-preserving communication channels

- Trust network visualization

- FPP Integration: Dignity-based community building and consensus

- ToIP Framework: Trust network establishment and management

- Privacy Pools: Community-driven ASP qualification process

Each agent will operate within explicit authority boundaries defined through verifiable credentials, exactly as the First Person Network whitepaper proposes. The system will implement configurable privacy levels, from maximum privacy with complete anonymity to selective disclosure for specific research collaborations, giving users granular control over their trust relationships and data sharing.
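As a sketch of how per-agent authority boundaries and configurable privacy levels might be expressed, consider the following. The scope names are loosely drawn from the agent descriptions above, and the two-level `PrivacyLevel` enum and the function names are assumptions, not the framework's actual configuration.

```python
from enum import Enum


class PrivacyLevel(Enum):
    MAXIMUM = "maximum"      # complete anonymity: disclose nothing
    SELECTIVE = "selective"  # disclose only fields the user approved


# Hypothetical authority boundaries for the three agents.
AGENT_SCOPES = {
    "archive": {"search_documents", "synthesise_knowledge"},
    "codex": {"analyse_policy", "check_compliance"},
    "discourse": {"post_to_forum", "build_consensus"},
}


def can_act(agent: str, action: str) -> bool:
    """An agent may only perform actions inside its declared scope."""
    return action in AGENT_SCOPES.get(agent, set())


def disclose(profile: dict, level: PrivacyLevel,
             approved_fields: frozenset = frozenset()) -> dict:
    """Return the part of a user's profile visible at the chosen level."""
    if level is PrivacyLevel.MAXIMUM:
        return {}
    return {k: v for k, v in profile.items() if k in approved_fields}


profile = {"name": "A. Researcher", "affiliation": "Example Lab",
           "expertise": "key management"}
shared = disclose(profile, PrivacyLevel.SELECTIVE, frozenset({"expertise"}))
```

In this sketch the user, not the agent, decides which fields appear in `approved_fields`, which is the granular control the paragraph above describes.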

Privacy Pools & Accountable Wallets - both identity and money primitives combined

The platform aims to integrate Privacy Pools’ Association Set Provider model, where research contributions could earn reputation-based access to enhanced features through zero-knowledge proofs. This creates dignity-based economics: users receive fair value for their contributions without sacrificing privacy or sovereignty.

This allows interoperability between value as money and value as information.

People sometimes ask me why I’m putting Privacy Pools into an identity system: ‘That’s a financial thing, right, not an identity thing?’

Well, in my opinion, value as money and value as information are converging. They are one and the same really, and AI doesn’t care: to an agent, both are just data on ledgers or in databases. Tokens on blockchains are a good way to represent transfers of both.

The more intelligence you share, the more you’ll want it private, just as the more money you hold, the more privacy matters. So we can learn and use the same system for both; the agents will thank us later when they mix the two.

I recently presented research at EDCON on “Accountable Wallets” and improving Privacy Pools, with Kigen Fukuda.

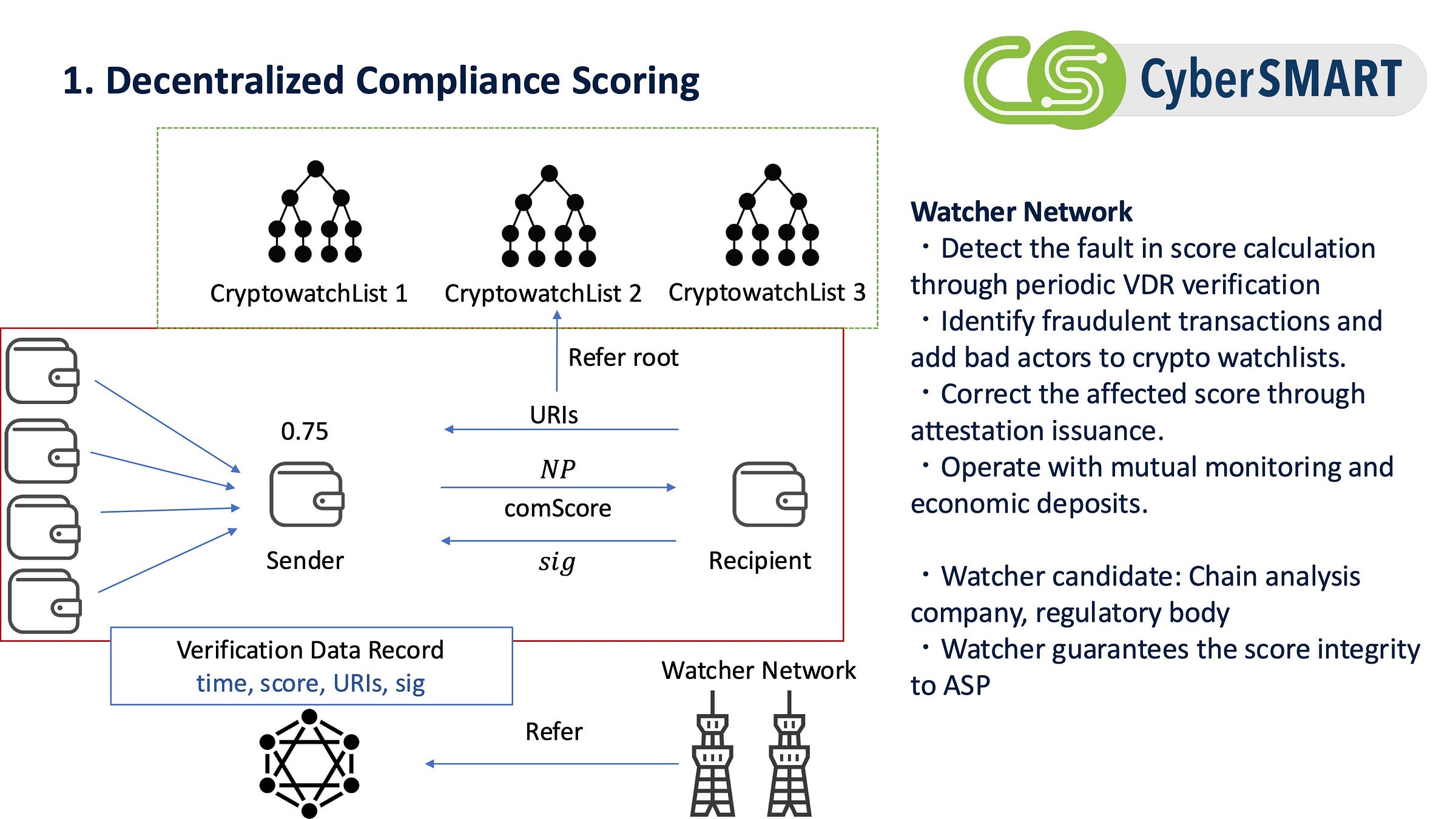

The work improves on the concept, extending Privacy Pools’ vision by constructing association sets based on wallet credibility scores through decentralised mechanisms.

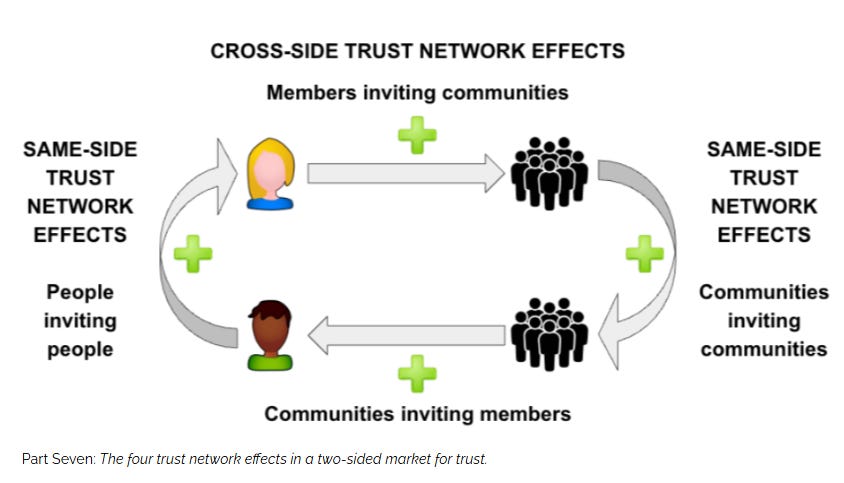

A Critical Distinction: Authority vs. Social Composability

Two paradigms emerge for establishing trust within association sets: issuer-as-authority and issuer-as-socially-composable. The former positions centralised entities as gatekeepers, assigning credibility scores top-down. The latter enables peer-to-peer attestations that compose into emergent trust graphs, where credibility derives from verifiable relationships rather than institutional decree.

Both approaches warrant exploration. Centralised issuers provide efficiency and regulatory compatibility, while socially composable proofs align more authentically with dignity-based economics and user sovereignty. Yet composable social proofs represent the more compelling pathway: they distribute power rather than concentrate it, enable contextual trust rather than universal scoring, and preserve the agency inherent to decentralised identity systems. Top-down score systems risk replicating the surveillance capitalism we seek to transcend.
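The socially composable model can be sketched as a graph of peer attestations, where trust is answered per context rather than as one universal score. The sketch below is illustrative only: the tuple format for attestations, the hop limit, and the function names are assumptions.

```python
from collections import defaultdict, deque


def build_graph(attestations):
    """Index (issuer, subject, context) attestations by context."""
    graph = defaultdict(lambda: defaultdict(set))
    for issuer, subject, context in attestations:
        graph[context][issuer].add(subject)
    return graph


def trust_distance(graph, context, source, target, max_hops=3):
    """Hops from source to target along attestations in one context.

    Returns None when no path exists within max_hops. Attestations are
    treated as directed: A attesting to B does not imply B attests to A.
    """
    if source == target:
        return 0
    edges = graph.get(context, {})
    seen = {source}
    queue = deque([(source, 0)])
    while queue:
        node, hops = queue.popleft()
        if hops == max_hops:
            continue  # do not expand beyond the hop limit
        for nxt in edges.get(node, ()):
            if nxt == target:
                return hops + 1
            if nxt not in seen:
                seen.add(nxt)
                queue.append((nxt, hops + 1))
    return None


attestations = [
    ("alice", "bob", "research"),
    ("bob", "carol", "research"),
    ("alice", "dave", "payments"),
]
graph = build_graph(attestations)
```

With these attestations, carol is two hops from alice in the research context but unreachable in the payments context, which is the difference between contextual trust and a single top-down score.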

Privacy Pools successfully balance on-chain privacy with regulatory compliance, but rely on a critical assumption: the existence of association sets containing only legitimate deposits. Our approach preserves Privacy Pools’ privacy guarantees while minimising trust assumptions and single points of failure through academically analysed security properties, ensuring robust, trustless operation.
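The association-set idea can be illustrated with a plain Merkle tree over the deposits that pass a credibility threshold. This is a simplified stand-in under stated assumptions: the scores, threshold, and function names are hypothetical, and the inclusion proof here reveals which deposit is being proven, whereas Privacy Pools proves membership inside a zero-knowledge circuit so that it is not revealed.

```python
import hashlib


def h(data: bytes) -> bytes:
    return hashlib.sha256(data).digest()


def association_set(deposits, scores, threshold):
    """Commitments whose depositing wallet meets the credibility threshold.

    deposits: list of (commitment_bytes, wallet_id) pairs (hypothetical format).
    """
    return sorted(c for c, wallet in deposits if scores.get(wallet, 0) >= threshold)


def merkle_proof(leaves, index):
    """Return (root, proof) for leaves[index]; proof is [(sibling, is_left)]."""
    if not leaves:
        raise ValueError("association set is empty")
    level = [h(leaf) for leaf in leaves]
    proof = []
    while len(level) > 1:
        if len(level) % 2:
            level = level + [level[-1]]  # pad odd levels by repeating the last node
        sibling = index ^ 1
        proof.append((level[sibling], sibling < index))
        level = [h(level[i] + level[i + 1]) for i in range(0, len(level), 2)]
        index //= 2
    return level[0], proof


def verify(leaf, proof, root):
    """Recompute the root from a leaf and its sibling path."""
    node = h(leaf)
    for sibling, sibling_is_left in proof:
        node = h(sibling + node) if sibling_is_left else h(node + sibling)
    return node == root


deposits = [(b"dep-1", "w1"), (b"dep-2", "w2"), (b"dep-3", "w3"), (b"dep-4", "w4")]
scores = {"w1": 90, "w2": 20, "w3": 75, "w4": 60}   # hypothetical scores
members = association_set(deposits, scores, threshold=50)
root, proof = merkle_proof(members, members.index(b"dep-3"))
```

A withdrawal tied to `dep-3` can be checked against `root`, while `dep-2`, whose wallet fell below the threshold, has no valid path to it.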

This work connects directly to the BGIN AI Multi-Agent Framework’s governance model. Both implementations demonstrate how cryptographic proofs and verifiable credentials can establish trust without a centralised authority.

Just as Accountable Wallet uses social and formal credibility scores (ideally composed from social attestations rather than imposed hierarchically) to construct trustworthy association sets, the multi-agent framework uses verifiable credentials to establish agent authority and researcher reputation within privacy-preserving boundaries.

See also the BGIN AI Agent Framework (from the workshops I hosted at the BGIN ETHTokyo Meetup).

Block 13 Integration Technologies

Kwaai Privacy Platform

- Privacy-Preserving Analytics: Advanced data analysis with privacy protection

- Selective Disclosure Protocols: Granular control over data sharing

- Zero-Knowledge Proofs: Cryptographic proofs without data revelation

- Privacy-First Architecture: Built-in privacy controls and anonymization

First Person Project (FPP)

- Data Sovereignty: User-controlled data and digital identity

- Dignity-Based Economics: Fair value distribution and user agency

- Privacy by Design: Privacy built into system architecture

- Transparent Governance: Open and accountable decision-making

Trust over IP (ToIP) Framework

- Decentralized Identifiers (DIDs): Agent identity management

- Verifiable Credentials: Cryptographic proof of capabilities

- Trust Networks: Reputation and relationship management

- Interoperability: Standards-compliant agent interactions

Privacy Pools

- Association Set Provider (ASP): Trust-based deposit approval

- Research Contribution Economics: Financial incentives for quality research

- Privacy-Preserving Transactions: Zero-knowledge proof integration

- Trust Network Economics: Reputation-based access to enhanced features

Trust networks for value transfers.

Trust scores for cybersecurity.

Trusted agents for intelligence transfers.

powers combined

The convergence of these approaches, privacy-preserving financial transactions through Privacy Pools, credibility-based trust through Accountable Wallet, and agent authority delegation through the BGIN framework, illustrates how a decentralised trust infrastructure can function across multiple domains while maintaining consistent privacy guarantees and user sovereignty.

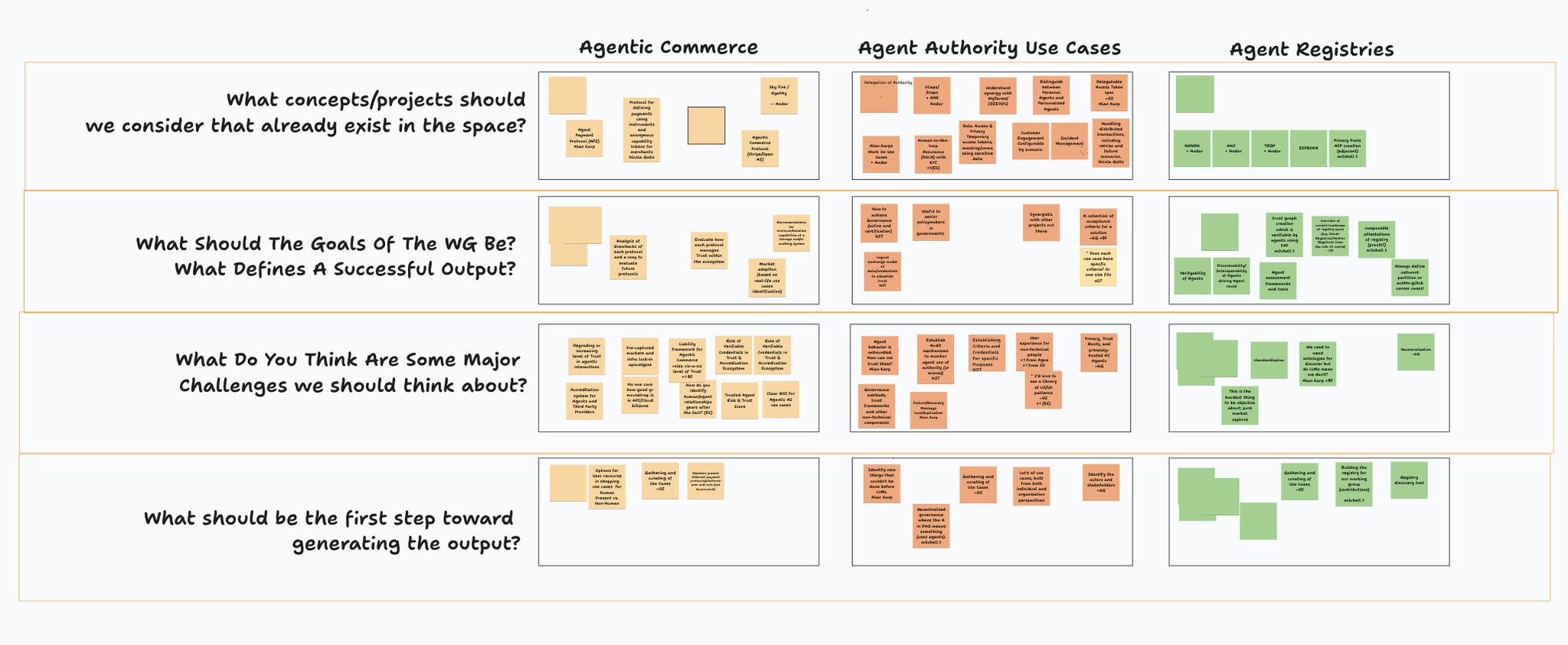

DIF Trusted Agents

The DIF Trusted Agents Working Group plays a critical role in this effort. Current focus areas include agentic authority models, agentic registry architectures, and agent-mediated commerce protocols.

Our first meeting was a couple of days ago, and the importance of this collaboration and work was echoed by all participants. I suspect clarity and an agent overlay will emerge, covering essential features for first-person verification, comprehensive systems and engineering for agent authority delegation, governance implementation, and accountability mechanisms.

The BGIN AI Multi-Agent Framework aims to serve as a reference implementation for many of these concepts, demonstrating how DID-based identity management, verifiable credentials, and trust network protocols could function in production environments. As DIF develops standards and common understanding for agent registries, authority models and commerce (to start with), we hope to validate these patterns through actual blockchain governance research, creating feedback loops between standards development and practical implementation.

What about Ethereum and agentic value on-chain?

Standards proposals such as EIP-8004 for Trustless Agents demonstrate how these theoretical constructs translate into concrete protocol specifications. EIP-8004 extends the Agent-to-Agent protocol with on-chain registries supporting identity, reputation, and validation, creating portable, censorship-resistant agent identifiers and establishing trust models spanning reputation-based attestations, crypto-economic validation mechanisms, and Trusted Execution Environment proofs.

The BGIN AI Agents aim to implement these patterns through their agent registry architecture, where each agent would maintain a DID-based identity with verifiable credentials attesting to its capabilities. When Archive Agent queries research documents or Codex Agent analyses policy frameworks, these actions would occur through cryptographically authenticated authority chains, demonstrating how EIP-8004’s vision could translate into working systems.
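A minimal in-memory sketch of an agent registry that tracks identity, reputation, and validation might look like this. It is not the EIP-8004 interface or the BGIN implementation: the class, the method names, and the 0 to 100 feedback scale are assumptions chosen to illustrate the three concerns.

```python
from dataclasses import dataclass, field
from statistics import mean
from typing import Optional


@dataclass
class AgentRecord:
    did: str                                            # identity
    capabilities: frozenset                             # attested capabilities
    feedback: list = field(default_factory=list)        # reputation scores, 0..100
    validated_tasks: set = field(default_factory=set)   # independently validated work


class AgentRegistry:
    """Hypothetical registry; a real one would live on-chain."""

    def __init__(self) -> None:
        self._records = {}

    def register(self, did: str, capabilities) -> None:
        if did in self._records:
            raise ValueError(f"{did} is already registered")
        self._records[did] = AgentRecord(did, frozenset(capabilities))

    def add_feedback(self, did: str, score: int) -> None:
        if not 0 <= score <= 100:
            raise ValueError("score must be between 0 and 100")
        self._records[did].feedback.append(score)

    def record_validation(self, did: str, task_id: str) -> None:
        self._records[did].validated_tasks.add(task_id)

    def reputation(self, did: str) -> Optional[float]:
        scores = self._records[did].feedback
        return mean(scores) if scores else None

    def may_perform(self, did: str, capability: str,
                    min_reputation: float = 0.0) -> bool:
        record = self._records.get(did)
        if record is None or capability not in record.capabilities:
            return False
        rep = self.reputation(did)
        # Agents without feedback are treated as having zero reputation.
        return (rep if rep is not None else 0.0) >= min_reputation


registry = AgentRegistry()
registry.register("did:example:archive-agent", {"query_documents"})
registry.register("did:example:codex-agent", {"analyse_policy"})
registry.add_feedback("did:example:archive-agent", 80)
registry.add_feedback("did:example:archive-agent", 90)
registry.record_validation("did:example:archive-agent", "task-001")
```

A caller would consult `may_perform` before accepting an agent's action, so that both the capability and the reputation threshold are checked against the registry rather than taken on the agent's word.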

This represents composable infrastructure enabling trust layers that function across organisational boundaries, supporting pluggable verification mechanisms and facilitating an open agent economy rather than proprietary platform silos.

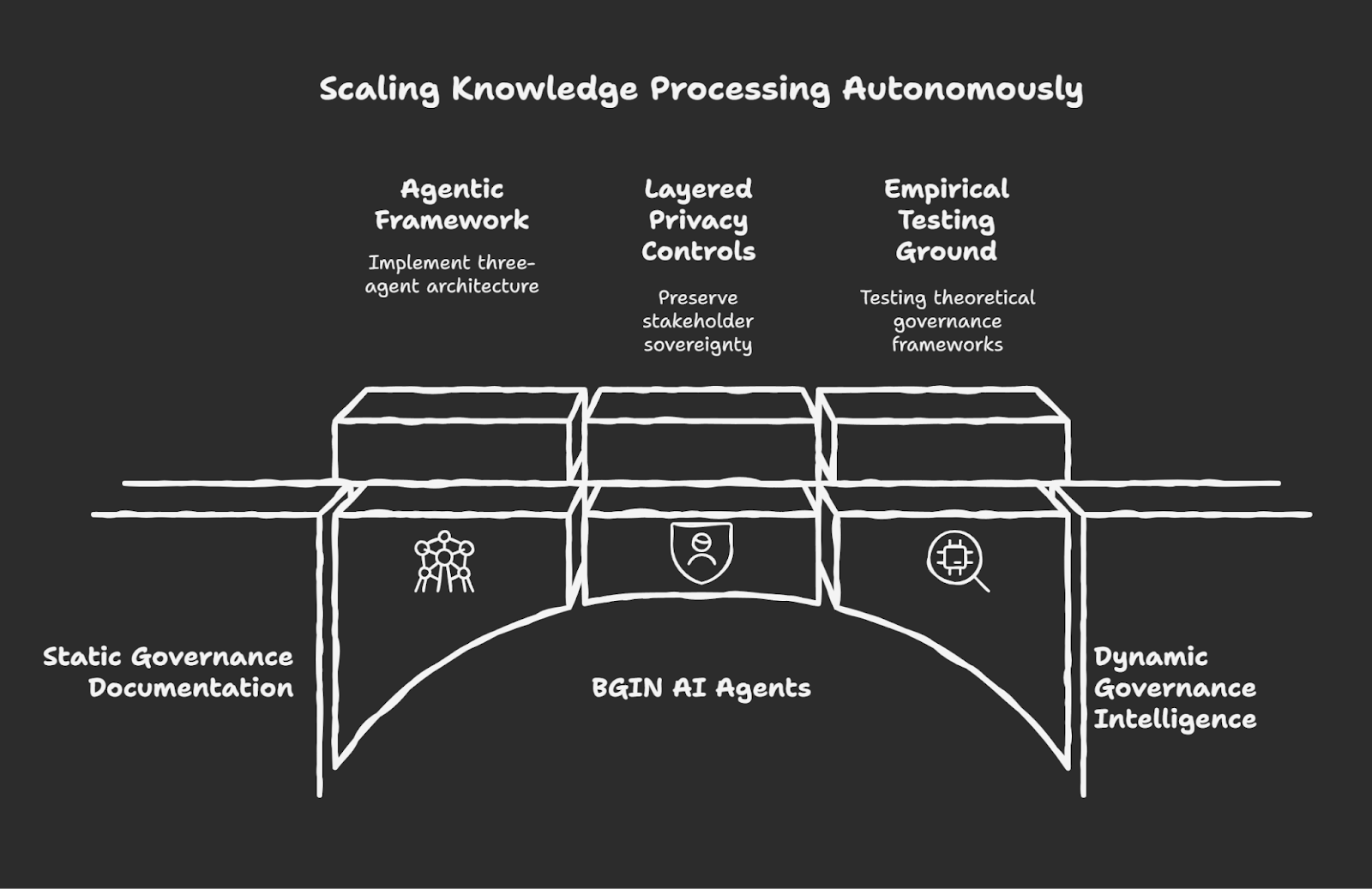

Governance Intelligence: BGIN’ing a Connection

This research builds on work conducted through BGIN’s Identity, Key Management & Privacy (IKP) Working Group, where we’ve been developing an Agentic Framework that implements the three-agent architecture now being built into the BGIN AI Multi-Agent Framework.

The core challenge is clear: autonomous agents must transform static governance documentation into dynamic governance intelligence while preserving stakeholder sovereignty. Whether we’re examining governance agents processing blockchain policy frameworks or personal AI agents executing delegated tasks, the fundamental question remains the same:

How do we scale knowledge processing and decision-making through automated systems without compromising human autonomy?

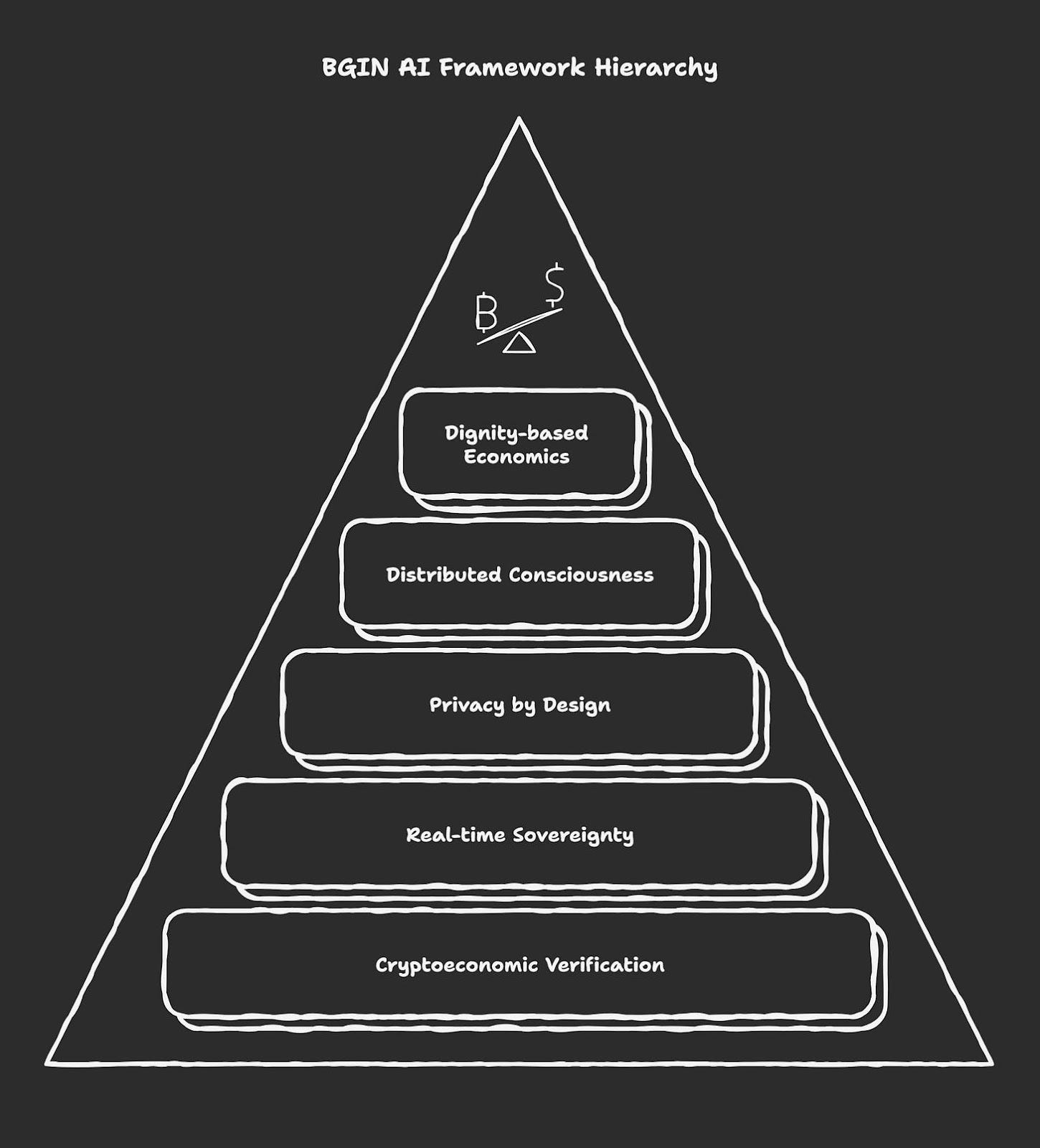

BGIN AI Agents aim to provide an empirical testing ground for these theoretical frameworks. The system implements this vision through layered privacy and sovereignty controls:

Privacy & Sovereignty Architecture

Based on the BGIN Agentic Framework:

Distributed Consciousness: Multi-agent coordination with privacy preservation

Privacy by Design: Built-in privacy controls and data protection

Dignity-based Economics: Fair value distribution and user sovereignty

Real-time Sovereignty Enforcement: Continuous monitoring of privacy compliance

Cryptoeconomic Verification: Blockchain-based trust and verification systems

Real-time sovereignty enforcement monitors every agent interaction, ensuring privacy compliance, while trust network visualisation reveals the emergent relationships between anonymous researchers. The system’s distributed consciousness architecture seeks to prove that agents can coordinate sophisticated research activities, cross-session synthesis, policy analysis, and community consensus building, without requiring centralised control or surveillance infrastructure.

Bridging Theory and Practice

Trust graphs emerge from identity theory. Verifiable AI emerges from cryptography. Together, these represent the theoretical foundations enabling trustworthy agent systems. Trust graphs demonstrate how identity relationships construct a verifiable social substrate, while cryptographic systems provide mathematical mechanisms to prove agent authority and authenticity without compromising privacy guarantees.

BGIN is uniquely positioned to conduct experiments at this theoretical intersection. As a neutral, multi-stakeholder governance and standards-setting organisation, it provides the empirical environment necessary to validate how these theoretical constructs perform in practice. Developing standards for blockchain governance requires frameworks that verify cryptographic proofs genuinely preserve sovereignty, that trust graphs accurately represent stakeholder relationships, and that governance frameworks scale without introducing centralisation.

Convergence in Action

BGIN’s methodology applies cryptographic consensus to maintain trust in governance processes. The First Person Network applies verifiable credentials to maintain trust in human-agent relationships. The BGIN AI Multi-Agent Framework demonstrates how both approaches converge, implementing decentralised trust infrastructure grounded in identity theory and cryptographic verification, tested through real governance research and community collaboration.

For those interested in contributing to this research agenda, BGIN Block 13 (October 15-17, 2025, Washington D.C.) will feature a BGIN Agent Hack translating policy discussions into executable implementations through agent-mediated standards and programmable governance frameworks. The BGIN AI Agents development will be discussed throughout the sessions, offering opportunities to shape and contribute to privacy-preserving agentic systems.

From Proof of Personhood to Data Dignity

The compelling aspect of this project extends beyond technical architecture to its governance model.

“of the people, by the people, for the people.” Kwaai AI

It is structured as neither an extractive platform nor a surveillance infrastructure, but rather as a network cooperative generating genuine value for individual participants and trust communities.

The BGIN AI Agents seek to demonstrate this pathway from proof of personhood to data dignity in practice. Researchers would maintain complete control over their credentials and data. They could delegate agent authority according to their specific needs, granting Archive Agent access to research documents for synthesis while keeping personal communications private, or allowing Codex Agent to analyse policy frameworks without exposing underlying research data. They would participate in governance structures through the Discourse Agent’s consensus-building tools, contributing to standards development while preserving their anonymity or selectively disclosing their expertise through verifiable credentials.

This framework demonstrates how infrastructure can serve human flourishing rather than platform monopolisation. The integration of Kwaai’s privacy platform, First Person Project’s dignity-based systems, Trust Over IP’s governance frameworks, and Privacy Pools’ cryptoeconomic incentives creates a comprehensive stack where technical capability and ethical governance could align.

Building Blocks

The future trajectory isn’t humans versus AI, but rather humans with AI agents they can verifiably trust, agents carrying authenticated authority, respecting privacy boundaries, and operating within transparent governance frameworks.

The building blocks exist. Standards bodies are collaborating. Implementation is underway.

The BGIN AI Multi-Agent Framework (GitHub), released at Block 13, is hopefully the start of showing how distributed consciousness, privacy-preserving analytics, dignity-based economics, and real-time sovereignty enforcement could function as an integrated system. This represents one node in an emerging network of privacy-preserving agentic platforms: each implementing shared standards, each respecting user sovereignty, each contributing to the open agent economy the First Person Network envisions.

Here is a sneak peek of the interface I’m vibing out at the moment for this. Help welcome!

I anticipate most of the code will be open source, as I’ve used mostly open-source repos to build it. This interface uses OpenWebUI to start with. Ideally, it can just be attached to a trust network, and you read and prompt it how and where you’d like.

The standards are being developed through multi-stakeholder processes. The governance frameworks prioritise dignity and sovereignty over extraction and surveillance.

What remains is to keep building, testing, and collaborating openly across the communities developing this infrastructure, from Linux Foundation and Trust Over IP to DIF, BGIN, and Kwaai, among others, and to invite the broader blockchain ecosystem. Join us.

pathing trust across the internet of value

connecting constellations in the digital dark

Links

BGIN AI Multi-Agent Framework (GitHub) (released at Block 13)